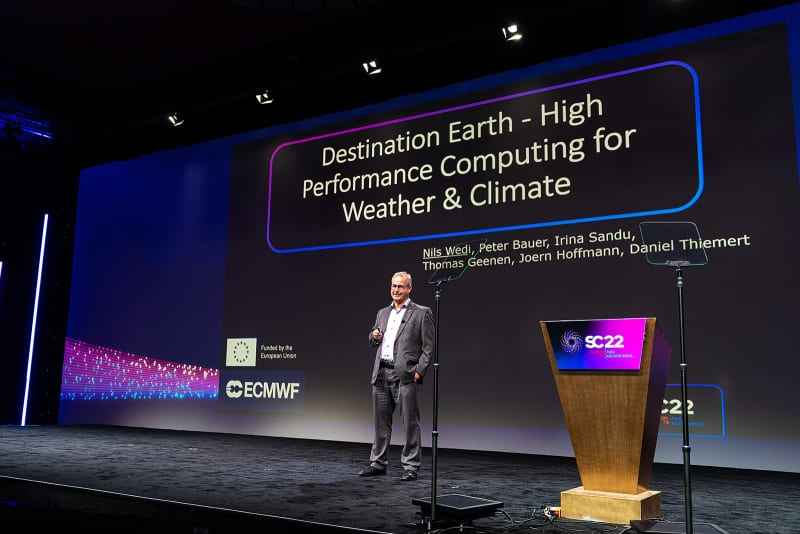

Invited by the SC Conference Series, the world’s reference event in high-performance computing (HPC), Dr Nils Wedi, Digital Technology Lead for Destination Earth at ECMWF, introduced Destination Earth’s digital twin deployment plan. Dr Wedi gave an overview of the role of some of the largest HPC systems in Europe and of how the advanced numerical weather prediction approach of the European Centre for Medium-Range Weather Forecasts (ECMWF) led to the quest for a new generation of weather and Earth system simulations.

Destination Earth (DestinE) needs intensive use of high-performance computing to run highly complex simulations of the Earth system at the very high resolution of the digital twins, to quantify the uncertainty of the forecasts, and to perform the complex data fusion, data handling and access operations that the system will make available. These were among the key messages that Dr Nils Wedi delivered to the audience of the SC22 supercomputing conference in Dallas on 17 November 2022, in one of the eight ‘Invited Talks’ of this edition.

As an example, Dr Wedi estimated that DestinE’s digital twins will ultimately produce 352 billion points of information and 1 petabyte of data a day for simulations at about 1.4 km horizontal resolution. For comparison, ECMWF’s current model produces 9 billion points and 100 terabytes a day for simulations at 9 km.
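To put these figures in proportion, the following is a minimal back-of-the-envelope sketch in Python. It uses only the numbers quoted above; the function name `scaling_summary`, the use of decimal units (1 petabyte = 10^15 bytes) and the assumption that output is spread evenly over 24 hours are illustrative choices, not part of the talk.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def scaling_summary(points_new=352e9, points_old=9e9,
                    bytes_new=1e15, bytes_old=100e12,
                    dx_new_km=1.4, dx_old_km=9.0):
    """Compare the quoted 1.4 km and 9 km configurations.

    Assumptions (not from the talk): decimal units, and data
    produced at a constant rate over a full day.
    """
    return {
        # Ratio of the quoted numbers of grid points.
        "point_ratio": points_new / points_old,
        # Growth in horizontal points expected from resolution alone:
        # halving the grid spacing quadruples the points on a surface.
        "horizontal_ratio": (dx_old_km / dx_new_km) ** 2,
        # Ratio of the quoted daily data volumes.
        "data_ratio": bytes_new / bytes_old,
        # Average sustained output rates in gigabytes per second.
        "throughput_new_gb_s": bytes_new / SECONDS_PER_DAY / 1e9,
        "throughput_old_gb_s": bytes_old / SECONDS_PER_DAY / 1e9,
    }


if __name__ == "__main__":
    for name, value in scaling_summary().items():
        print(f"{name}: {value:.2f}")
```

Under these assumptions, the quoted point counts differ by a factor of about 39, close to the factor of about 41 expected from the change in horizontal grid spacing alone, while 1 petabyte a day corresponds to an average of roughly 11.6 gigabytes per second.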

Dr Wedi explained the agreement between DestinE and the EuroHPC Joint Undertaking (EuroHPC JU), which has granted access to some of the largest pre-exascale systems in Europe. Two of them, LUMI and Leonardo, were announced in 3rd and 4th place on the TOP500 list (top500.org) during the SC22 conference, ahead of Summit, which ECMWF has used successfully in the past through the INCITE programme. The TOP500, launched in 1993, ranks the 500 most powerful computer systems in the world and is updated twice a year; one of the two yearly updates is released during the SC conference.

- LUMI, hosted by Finland’s CSC IT Center for Science, with a measured Linpack performance of 309.1 petaflops (428.7 petaflops peak).

- Leonardo, hosted by Italy’s Cineca, with a Linpack performance of 174.7 petaflops (255.7 petaflops peak).

- MareNostrum 5, hosted by the Barcelona Supercomputing Center, with an estimated peak performance of 314 petaflops.

Linpack performance is determined by running a standard benchmark code on the entire system to exploit the maximum available computing power of such machines. However, real applications such as digital twins, which combine a range of algorithmic patterns, need substantial investment in fundamental software development and adaptation to use as much of this capability as possible, hence ECMWF’s investment.

EuroHPC investment also includes other, smaller platforms throughout Europe. By 2024, DestinE is expected to operate on some high-end supercomputers with a performance of up to 1 exaflop. “And there’s of course more coming,” said Nils Wedi.

“We need to build workflows that are resilient and generate reproducible results also on machines that will be emerging in the future, as for example the big exascale computer in Jülich, Germany, or the Alps machine to be deployed in Switzerland that are exciting venues where these workflows could operate in the future,” he said.

Distributed computing resources

COP27 advocates addressing “existing gaps in the global climate observing system, particularly in developing countries, and recognises that one third of the world, including sixty per cent of Africa, does not have access to early warning and climate information services”. Dr Wedi pointed out the need and the opportunity to address this by rebalancing the grossly uneven distribution of computing resources between the northern and southern hemispheres, a point also raised recently at the World Meteorological Organization (WMO). A system such as DestinE could contribute to the required information services if the necessary investments are made first in developing countries, which are often on the frontline of the consequences of climate change.

During the 45-minute talk, Nils Wedi also mentioned the Center of Excellence in Weather and Climate Modelling, opened with Atos, as one of the HPC-related activities within ECMWF, and gave some examples of the close cooperation between the Centre, academia and key actors in GPU acceleration such as Nvidia.

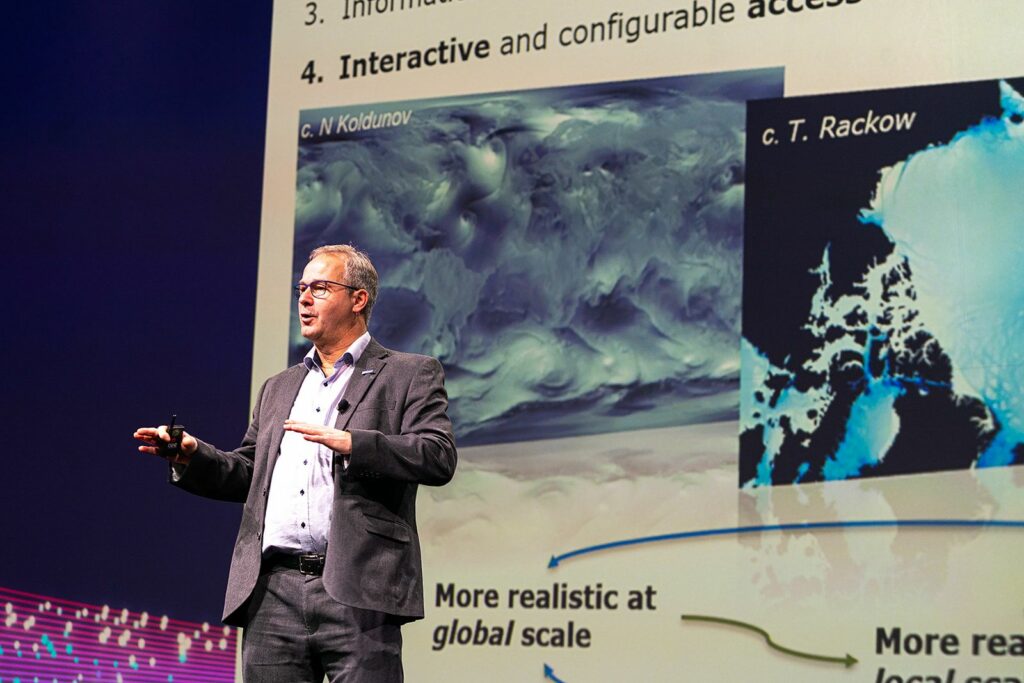

The Digital Technology Lead for DestinE at ECMWF also detailed some of the technological challenges of making the most of HPC capacity, and the demanding scientific work needed to represent global Earth-system physics as realistically as possible, so that the impacts of climate change and extremes are reproduced at scales that matter to society.

Nils Wedi’s talk sparked lively interest from the audience, with a round of questions that lasted until the session ran out of time. They ranged from how DestinE can integrate with smaller regional models to how it will convince climate sceptics.

Managing vast amounts of data in the cloud

One attendee asked what distributing the 1 petabyte of data produced daily by the digital twins will mean for the networks, and remarked that network technology also evolves. Dr Nils Wedi explained that data compression and data reduction will remain absolutely key for DestinE.

“That’s why we are creating an elaborate data management plan. The 1 petabyte is only on the HPC, it’s all the information that will be produced. The different applications will not all need that 1 petabyte, everyone needs their own slice from this. We need to parallelise the access and extract the user-specific information so that we can extract targeted content from this information.”

“Think of it like a big digital observation platform: it continuously produces information but not everyone needs everything from these observations. Also, if you are not ‘listening’ to it, it might be gone at some point. We are not preparing for archiving all these petabytes of data. We are preparing for the system to continuously produce and reproduce. Then you listen to it, you capture and collect what you need, and that will already be substantially less than one petabyte.”

“So in terms of network we are thinking of an immediate reduction of several hundreds of gigabytes and then flowing out we are thinking of terabytes streaming to the core service platforms and the rest of the infrastructure. There will be an exponential reduction of the data flows as you go further down the line.”
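The “listening” analogy can be illustrated with a small, purely hypothetical Python sketch. It does not use or represent any DestinE interface: the names `produce_fields`, `listen` and `demo`, the variable names and the synthetic random data are all assumptions made for illustration. A producer yields fields one at a time without archiving them, and a consumer keeps only the variable and the slice of points it needs.

```python
import random
from typing import Dict, Iterator, List


def produce_fields(n_steps: int, variables: List[str],
                   n_points: int, seed: int = 0) -> Iterator[Dict]:
    """Hypothetical producer: yields synthetic fields and stores nothing."""
    rng = random.Random(seed)
    for step in range(n_steps):
        for variable in variables:
            yield {
                "step": step,
                "variable": variable,
                "values": [rng.random() for _ in range(n_points)],
            }


def listen(stream: Iterator[Dict], wanted_variable: str,
           point_slice: slice) -> List[Dict]:
    """Hypothetical consumer: captures one variable over one slice of points."""
    captured = []
    for field in stream:
        if field["variable"] != wanted_variable:
            continue  # not listened to: the field passes by and is gone
        captured.append({
            "step": field["step"],
            "values": field["values"][point_slice],
        })
    return captured


def demo():
    """Return (values produced, values kept) for a toy configuration."""
    variables = ["temperature", "wind", "precipitation"]
    n_steps, n_points = 3, 1000
    stream = produce_fields(n_steps, variables, n_points)
    subset = listen(stream, "temperature", slice(100, 150))
    produced = n_steps * len(variables) * n_points
    kept = sum(len(field["values"]) for field in subset)
    return produced, kept


if __name__ == "__main__":
    produced, kept = demo()
    print(f"produced {produced} values, kept {kept}")
```

In this toy configuration the consumer keeps 150 of the 9,000 values produced, which mirrors the idea that each application captures only its own slice rather than the full output.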