The Climate Change Adaptation Digital Twin of the European Commission’s Destination Earth initiative (Climate DT) is a step change in the way information on climate change is provided, aiming to help society face the complex challenges posed by our changing climate. The Climate DT is a pioneering effort to operationally produce multi-decadal climate projections. It leverages the latest scientific and technological advances, including the unprecedented computing power of Europe’s first pre-exascale supercomputers, provided by the European High Performance Computing Joint Undertaking (EuroHPC JU). The Climate DT will provide regularly updated, globally consistent Earth system and impact-sector information at scales of a few kilometres, where many of the impacts of climate change are felt. It will thus shorten the update cycle of climate projections from the current 7–10 years to a year or less. At the same time, it is establishing a unique capability to produce bespoke, cutting-edge numerical simulations that address ‘what-if’ questions about the impact of specific scenarios or policy decisions on the evolution of our planet. The Climate DT projections, from global to local scale and for several decades ahead, will support decision-making for climate change adaptation and the implementation of the European Green Deal.

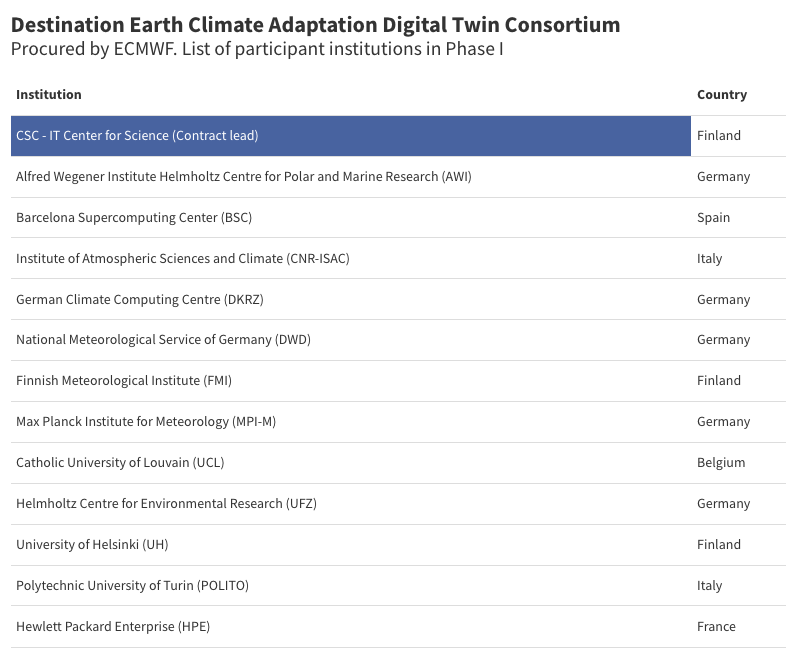

Let’s take a closer look at this innovative system, developed by a broad consortium led by CSC – IT Center for Science, under a contract procured by the European Centre for Medium-Range Weather Forecasts (ECMWF) within the Destination Earth initiative funded by the European Commission. The partnership involves leading climate centres, supercomputing centres, national meteorological services, academia and industrial partners from six European countries.

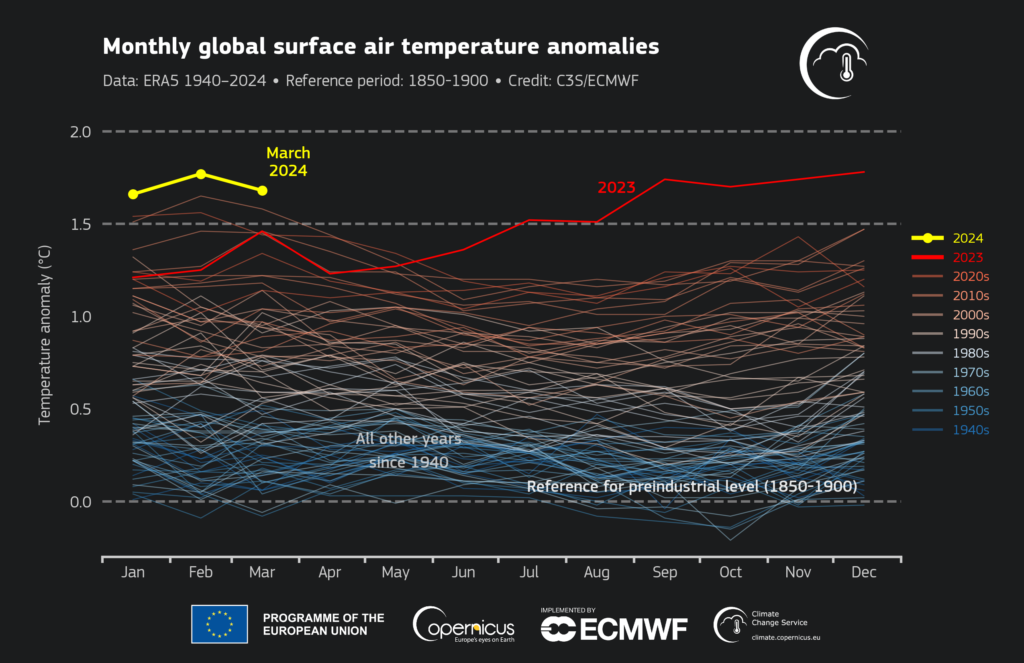

Recent years, and especially the last few months, have shown the extent to which the climate crisis is intensifying. We have entered uncharted territory. The Copernicus Climate Change Service (C3S) ERA5 reanalysis dataset shows that the global mean temperature in 2023 was the highest since records began in 1940, and we have experienced a 12-month period with an average global mean temperature more than 1.5°C above the pre-industrial level.

In other words, we are getting ever closer to the 1.5°C global warming limit set in the Paris Agreement. At the same time, certain extreme weather events are becoming more frequent and intense, often with catastrophic impacts. This fast-evolving situation calls for enhancing our capabilities to respond and adapt to environmental challenges in a changing climate, beyond what existing sources of climate information can currently offer.

This is one of the main goals of the European Commission’s ambitious Destination Earth initiative (DestinE), which aims to develop a digital replica of our planet, with digital twins simulating different aspects of the Earth system. This new type of information system, with enhanced detail, quality, consistency and interactivity, also allows us to address relevant ‘what-if’ questions about the Earth system.

ECMWF and its partners from 90 institutions across Europe are implementing the first two high-priority digital twins of DestinE, on Weather-induced Extremes and on Climate Change Adaptation (Climate DT), as well as the Digital Twin Engine, an innovative software architecture that supports running the digital twins and interacting with them and their data. The delivery of the digital twins relies critically on the strategic partnership with the EuroHPC JU, which provides special access to its supercomputers because DestinE is considered a strategic initiative for the European Union. This partnership translates Europe’s investments in pre-exascale supercomputers into benefits for society.

Destination Earth’s Climate DT will provide a new capability to determine the impacts of scenarios or policies on the climate system and relevant impact sectors, at global, regional and local scales, several decades ahead, using cutting-edge numerical simulations.

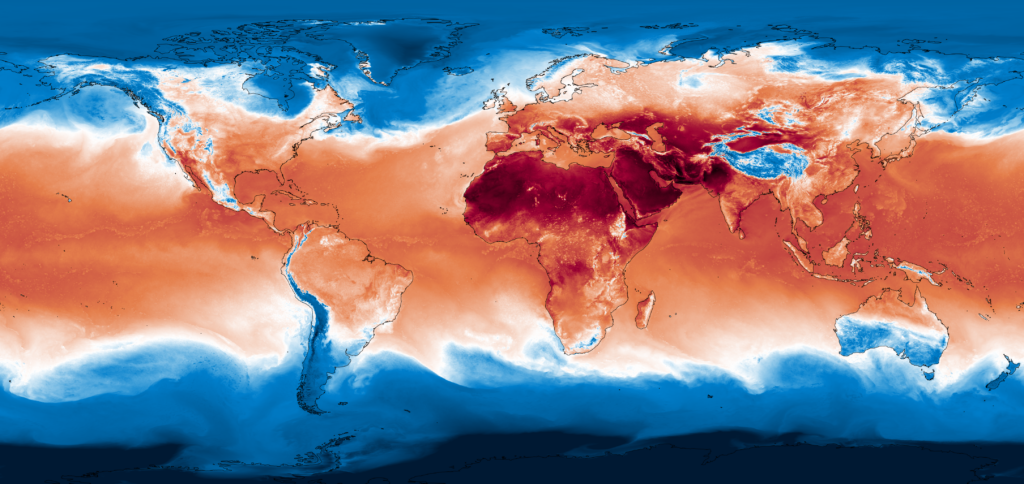

A satellite image of the future: a simulated satellite image covering 100 days (1 May to 8 August 2032), derived from one of the prototype projections of the Climate DT with IFS-NEMO (at 4.4 km resolution for the atmosphere and land, and 1/12 degree for the ocean and sea ice), performed on the EuroHPC LUMI supercomputer. Such a level of detail, now obtained with km-scale climate simulations, was previously available only from weather prediction models. Credit: ECMWF / Andreas Müller / LUMI

Importantly, these projections will be updated regularly (at least yearly, for example with improved models or different scenarios) and on demand to address specific ‘what-if’ questions. This will change the level of support currently available for designing adaptation measures, for example by assessing climate impacts under several policy-relevant scenarios, or by creating dedicated storylines of how recent extreme events with high impacts on climate-vulnerable sectors have been affected by climate change and may unfold in different future climates.

The Climate DT is implemented by a strong partnership led by the CSC-IT Center for Science, through a contract procured by ECMWF, which started in October 2022.

The teams involved in the Climate DT have been working intensely in close collaboration with teams at ECMWF to develop the key components of the Climate DT, deploy them on the EuroHPC LUMI system, the first pre-exascale computer in Europe, and perform the first-ever prototype projections at 5 km resolution spanning a couple of decades.

Stay tuned for more information on the key achievements of the Climate DT during the first phase of DestinE, which ends in June 2024, and on the outlook for the second phase of the initiative (June 2024 – June 2026). The European Commission will mark the launch of DestinE with a high-level event at LUMI in Finland on 10 June 2024.

Climate DT: main components and features

The long-term vision of the Climate DT is to provide a novel source of high-resolution global climate information for both the present and the future, enabling end users to assess the effectiveness of different adaptation strategies.

The Climate DT is designed for the continuous production of climate data and projections, ensuring that high-quality, up-to-date information is always readily available. It is also designed for on-demand production, making it possible to rerun the global models to address specific “what-if” questions, or even to “insert” twins-within-the-twin for specific aspects.

In practice, the Climate DT relies on global km-scale climate models and flexible workflows, including directly linked impact models. Key features include an operational configuration with monitoring and evaluation, and interactive elements that improve responsiveness to emerging user needs. Recent breakthroughs in Artificial Intelligence (AI) will boost the interactivity of the Climate DT, in particular through tailored chatbots that ease access to the newly produced climate information, and through Machine Learning models that enhance uncertainty quantification.

Involving selected users from the very start of its conception, following DestinE’s co-design strategy, is key to ensuring that the main recipients obtain the climate information they need.

Km-scale, global storm-resolving eddy-rich climate models

The Climate DT employs a global km-scale modelling approach, characterised by several innovative aspects. The km-scale models aim to improve the representation of critical processes and reduce climate model biases, to enhance the overall trustworthiness of climate projections. They provide consistent climate information with local granularity on a global scale, bridging the gap between large-scale climate projections and local climate impacts and avoiding data gaps and inconsistencies that come with existing regional downscaling efforts.

The models underpinning the Climate DT incorporate atmosphere, land, ocean and sea-ice components. They are building on some of Europe’s leading weather and climate models, which are now adapted to km-scale resolutions in close sync with other initiatives (e.g., the Horizon 2020 nextGEMS and Horizon Europe EERIE projects and the Warm World project in Germany), through a truly European collaborative endeavour.

- IFS/NEMO has been developed starting from the most recent version of the ECMWF Integrated Forecasting System (IFS) atmospheric/land model coupled to the latest release of the NEMO model implemented by the Barcelona Supercomputing Center in collaboration with ECMWF.

- IFS/FESOM also employs the most recent version of IFS, coupled to FESOM, one of the first mature global sea ice-ocean models formulated on unstructured meshes. The Finite-VolumE Sea ice-Ocean Model (FESOM) is developed by the Alfred Wegener Institute (AWI).

- ICON is a flexible, scalable, high-performance modelling framework for weather, climate and environmental prediction. The ICOsahedral Non-hydrostatic model has been jointly developed by the German Weather Service (DWD), the Max-Planck-Institut für Meteorologie (MPI-M), the Deutsches Klimarechenzentrum (DKRZ), the Karlsruhe Institute of Technology (KIT) and the Center for Climate Systems Modeling (C2SM).

The Climate DT leverages some of the world’s most powerful pre-exascale HPC systems, those of the EuroHPC JU, a task that presents significant challenges but offers unparalleled opportunities (e.g., exploiting km-scale resolution in multi-decadal climate projections). This involves a continuous, substantial effort to adapt and optimise the climate models to the pre-exascale European HPC platforms, so as to make efficient use of heterogeneous architectures, with both general-purpose and accelerated processors, and of the corresponding data-handling capacity. This is an absolute requirement for efficiently performing kilometre-scale global simulations (with a target of 5 km) on multi-decadal timescales on HPC systems distributed across Europe.

Operational projections using flexible workflows

Developing a digital twin of the complex Earth-system delivering operational multi-decadal projections requires strictly following best practices in software engineering.

These include the generalised use of continuous integration and delivery, agile software development methodologies, best practices in code structuring and documentation, performance analysis and performance portability.

Autosubmit, the workflow manager used in the Climate DT on the EuroHPC platforms, creates and manages the workflows that orchestrate the different elements of the Climate DT, and allows an arbitrary number of data consumers to be incorporated.
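To make the orchestration idea concrete, here is a minimal Python sketch of a workflow as a dependency graph, in which each simulation chunk must finish before the data consumers attached to it can run. It does not use Autosubmit's own configuration format: the job names (`SIM_1`, `ENERGY_1`, ...) and the `build_workflow` helper are hypothetical, and Python's standard `graphlib` module stands in for the workflow manager's scheduler.

```python
from graphlib import TopologicalSorter


def build_workflow(n_chunks: int, consumers: list[str]) -> dict[str, set[str]]:
    """Return a dependency graph mapping each (hypothetical) job to the jobs it waits for."""
    graph: dict[str, set[str]] = {}
    for chunk in range(1, n_chunks + 1):
        sim = f"SIM_{chunk}"
        # Each simulation chunk restarts from the end of the previous one.
        graph[sim] = {f"SIM_{chunk - 1}"} if chunk > 1 else set()
        for consumer in consumers:
            # A data consumer processes a chunk only once that chunk has been produced.
            graph[f"{consumer}_{chunk}"] = {sim}
    return graph


# Usage: two simulation chunks, each feeding two illustrative impact applications.
graph = build_workflow(n_chunks=2, consumers=["ENERGY", "HYDRO"])
order = list(TopologicalSorter(graph).static_order())
print(order)  # one valid execution order respecting all dependencies
```

Adding another consumer only adds nodes that depend on the simulation jobs, so the simulation chain itself is unchanged; this is one way to read the claim that an arbitrary number of data consumers can be incorporated.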

The Climate DT employs flexible workflows for the operational (regular) production of climate projections, embedding selected applications. Compared with the 7–10-year cycles of CMIP (Coupled Model Intercomparison Project), the update cycle is reduced to a year or less, enhancing responsiveness to emerging policy requirements and user needs.

In times of transformative change in climate science, such as the advent of AI, short update cycles are crucial for incorporating the latest advances into the production of the best possible climate information.

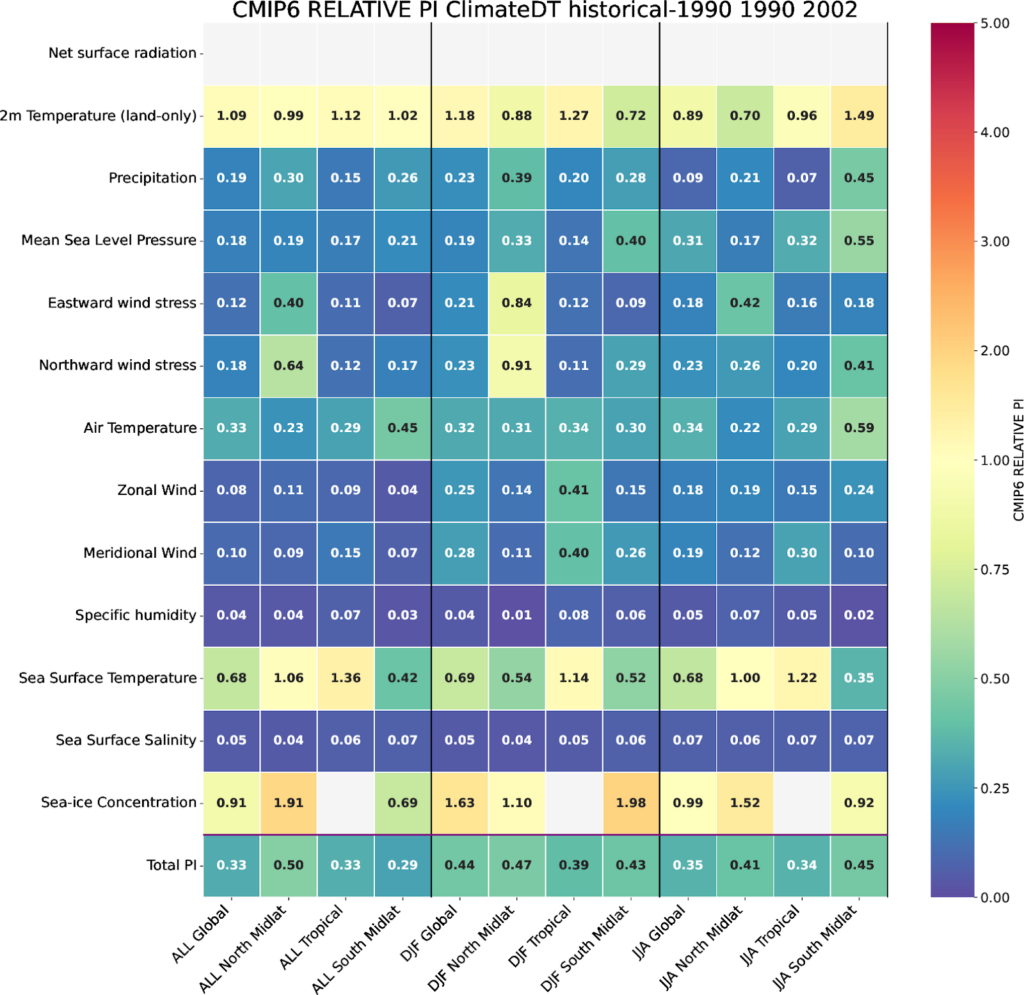

The regular production entails scenario projections for the period 2020–2050 following the emission scenarios defined by ScenarioMIP. Historical simulations starting in 1990, following a modified HighResMIP protocol, as well as long control simulations with constant 1950 forcing, are used to evaluate model performance.

An important part of the operationalisation of multi-decadal projections is defining a common data portfolio and data governance following international standards. This implies providing the same parameters, in the same format, for all models, and all simulations, which is an important aspect of making the data usable. This ensures that once a user has adapted to the DestinE data formats, they can use new simulations from any of the participating models straightaway without further adjustments.

The simulation outputs defined in the DestinE portfolio are transferred to the DestinE Data Lake, implemented by EUMETSAT, and can be accessed via the DestinE Core Platform, implemented by ESA. The data portfolio is not static: it will be enriched with new variables and output frequencies as new user requirements are identified.

Quality assurance, evaluation and uncertainty quantification

Another important aspect is to ensure that quality control measures are in place to continuously monitor the accuracy and reliability of the climate data produced. This implies applying a battery of tests to ensure consistency and completeness before making the data accessible to users.
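The kind of completeness and consistency tests mentioned above can be sketched as follows. This is an illustrative assumption, not the Climate DT's actual test suite: the `Field` record, the `run_checks` function and the variable short names are hypothetical, and real checks would read this metadata from the output data itself.

```python
from dataclasses import dataclass


@dataclass
class Field:
    """Simplified, hypothetical metadata for one output field."""
    variable: str
    timestep: int
    n_points: int
    n_missing: int


def run_checks(fields, expected_vars, expected_steps, expected_points):
    """Return a list of problems found; an empty list means all checks passed."""
    problems = []
    present = {(f.variable, f.timestep) for f in fields}
    # Completeness: every expected variable must exist at every expected time step.
    for var in expected_vars:
        for step in expected_steps:
            if (var, step) not in present:
                problems.append(f"missing {var} at step {step}")
    for f in fields:
        # Consistency: every field must be on the same grid size.
        if f.n_points != expected_points:
            problems.append(
                f"{f.variable} step {f.timestep}: {f.n_points} points, expected {expected_points}"
            )
        # Validity: this toy check allows no missing values.
        if f.n_missing > 0:
            problems.append(f"{f.variable} step {f.timestep}: {f.n_missing} missing values")
    return problems


# Usage: one field ("tp" at step 1) was never written, so the batch is flagged.
fields = [Field("2t", 0, 12, 0), Field("2t", 1, 12, 0), Field("tp", 0, 12, 0)]
print(run_checks(fields, ["2t", "tp"], [0, 1], 12))  # ['missing tp at step 1']
```

Collecting all problems instead of stopping at the first one reflects the idea of a battery of tests: a single report can tell operators everything that must be fixed before the data are released to users.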

It also implies a rigorous scientific evaluation of the climate simulations, performed in two phases. First, the simulations are exhaustively monitored and compared with observations (for historical simulations) or with benchmark simulations (for projections) to assess their realism. This includes innovative diagnostics that make use of the full model output. For example, ocean eddies and tropical storms can be analysed in unprecedented detail, given the wealth of variables, frequencies and resolution available. In a second phase, the diagnostics focus on a deeper analysis, documenting evaluation results relevant for climate adaptation.

This evaluation informs the evolution of the Climate DT by identifying problems to be addressed through model development. This continuous evolution ensures that the Climate DT provides high-quality information fit for evolving user needs.

The Climate DT combines this comprehensive quality assessment with a quantification of the main sources of uncertainty. Uncertainty quantification provides essential context for translating the information produced by the Climate DT into policy decisions, adaptation strategies, and risk assessments for business plans.

Streaming Earth system information to applications

The Climate DT introduces a paradigm shift in the generation of information for climate adaptation. Most current climate information sources, including global and regional climate projections, lack the flexibility to adapt their outputs to users’ requirements. Moreover, the large volumes of model output make it impossible to store the full information content. Radically new approaches are therefore needed.

This motivates one of the key innovations of the Climate DT to address these challenges: the streaming approach. The streaming of the full model output, with all the variables available, at native spatial resolution and the highest frequency possible, gives users immediate access (on the HPC) to the complete set of climate data generated by the models.
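The sketch below illustrates the streaming idea under simplifying assumptions: a stand-in model yields one full-resolution field per time step, and each consumer updates its own running result before the field is discarded, so no consumer needs the full archive. All names (`model_stream`, `RunningMax`, `ExceedanceCounter`, `run`) are hypothetical; the Climate DT's actual streaming infrastructure is not shown here.

```python
from typing import Iterator, List, Optional


def model_stream(n_steps: int, n_points: int) -> Iterator[List[float]]:
    """Stand-in for a running model: yields one full-resolution field per time step."""
    for step in range(n_steps):
        # Toy values; a real model would produce physical fields here.
        yield [float(step + i) for i in range(n_points)]


class RunningMax:
    """Consumer that keeps the per-point maximum without storing past steps."""

    def __init__(self) -> None:
        self.values: Optional[List[float]] = None

    def consume(self, field: List[float]) -> None:
        if self.values is None:
            self.values = list(field)
        else:
            self.values = [max(a, b) for a, b in zip(self.values, field)]


class ExceedanceCounter:
    """Consumer that counts, per point, how many steps exceed a threshold."""

    def __init__(self, threshold: float, n_points: int) -> None:
        self.threshold = threshold
        self.counts = [0] * n_points

    def consume(self, field: List[float]) -> None:
        for i, value in enumerate(field):
            if value > self.threshold:
                self.counts[i] += 1


def run(stream, consumers) -> None:
    # Each field is handed to every consumer and then discarded.
    for field in stream:
        for consumer in consumers:
            consumer.consume(field)


# Usage: two independent applications served by the same stream.
peak, hot_steps = RunningMax(), ExceedanceCounter(threshold=2.5, n_points=3)
run(model_stream(n_steps=3, n_points=3), [peak, hot_steps])
print(peak.values, hot_steps.counts)
```

Memory use per consumer depends on the size of one field and its own running state, not on the length of the simulation, which is what makes working on the full, native-resolution output feasible.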

Moreover, the Climate DT produces data in a standardised format across different models and model components (the so-called Generic State Vector) on a unified, equal-area, hierarchical HEALPix grid, optimised for hierarchical data exploitation as required for high-resolution visualisation, local data retrieval and AI/ML training. This grid is fast gaining traction in the community for working efficiently with very high-resolution data.
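A few lines of arithmetic show why HEALPix suits hierarchical exploitation. A HEALPix grid with resolution parameter `nside` has `12 * nside**2` pixels of equal area, and in the NESTED ordering each pixel `p` splits into four children numbered `4p` to `4p + 3` at the next level, so coarsening is a plain average over groups of four. The helper names below are hypothetical and the `nside` values are illustrative, not the resolutions used by the Climate DT; established libraries such as healpy provide production implementations.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def npix(nside: int) -> int:
    """Number of HEALPix pixels on the sphere: 12 * nside**2."""
    if nside < 1 or nside & (nside - 1):
        raise ValueError("nside must be a positive power of two for the nested scheme")
    return 12 * nside * nside


def pixel_spacing_km(nside: int) -> float:
    """Square root of the (equal) pixel area on Earth, a rough measure of grid spacing."""
    area = 4.0 * math.pi * EARTH_RADIUS_KM ** 2 / npix(nside)
    return math.sqrt(area)


def children(pixel: int) -> list[int]:
    """In NESTED ordering, pixel p at one level splits into 4p..4p+3 at the next finer level."""
    return [4 * pixel + k for k in range(4)]


def parent(pixel: int) -> int:
    """Inverse of children(): the coarser pixel that contains `pixel`."""
    return pixel // 4


def coarsen(values: list[float]) -> list[float]:
    """Average each group of four nested children into its parent (equal areas, so a plain mean)."""
    return [sum(values[4 * p: 4 * p + 4]) / 4.0 for p in range(len(values) // 4)]


# Usage: spacing at two illustrative levels, and one coarsening step.
print(round(pixel_spacing_km(1024), 2), round(pixel_spacing_km(2048), 2))
print(coarsen([1.0, 2.0, 3.0, 4.0, 5.0, 5.0, 5.0, 5.0]))  # [2.5, 5.0]
```

For example, `nside = 1024` gives a spacing of roughly 6.4 km and `nside = 2048` roughly 3.2 km. Because parents and children are related by simple integer arithmetic, a viewer or a local retrieval can move between levels of detail without any interpolation.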

From co-design to co-production of data

Selected applications were embedded in the Climate DT right from the start, through a co-production approach.

Co-production, an iterative process, allows the Climate DT team to deepen its understanding of user needs and to explore with users what can be done better with the novel information. This helps shape the digital twin and optimise the experimental set-up of the continuous simulations, and ensures that the data produced are relevant for the climate adaptation community.

New impact models and new sets of indicators that estimate climate-related variables relevant for key sectors such as renewable energy, water management or agriculture can be incorporated from one simulation to the next.

This strategy is also applied to develop bias-adjusted data directly from the model simulations for those impact models that require it.

The bias adjustment parameters are estimated and continuously refined using the model output from previous simulations. In this online setting, the impact model and the bias adjustment can run simultaneously with the global climate model.
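The text does not specify which bias adjustment method is used, so the following is a hedged stand-in: simple mean-and-variance scaling, whose model statistics are refined online with Welford's algorithm as new output arrives, while the reference statistics (for example from observations) stay fixed. The class names and the choice of method are assumptions for illustration only.

```python
import math


class RunningStats:
    """Welford's algorithm: running mean and variance without storing the samples."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        # Sample standard deviation; undefined (NaN) for fewer than two samples.
        return math.sqrt(self._m2 / (self.n - 1)) if self.n > 1 else float("nan")


class MeanVarianceAdjuster:
    """Hypothetical online bias adjustment by mean-and-variance scaling.

    Model statistics are refined as new model output streams in; the
    reference statistics (e.g. from observations) are held fixed.
    """

    def __init__(self, ref_mean: float, ref_std: float) -> None:
        self.ref_mean = ref_mean
        self.ref_std = ref_std
        self.model = RunningStats()

    def learn(self, model_value: float) -> None:
        self.model.update(model_value)

    def adjust(self, model_value: float) -> float:
        # Standardise with the model's statistics, then rescale to the reference ones.
        z = (model_value - self.model.mean) / self.model.std
        return self.ref_mean + self.ref_std * z


# Usage: the model runs "cold" and with too little variability relative to the reference.
adjuster = MeanVarianceAdjuster(ref_mean=10.0, ref_std=2.0)
for value in [1.0, 2.0, 3.0, 4.0]:
    adjuster.learn(value)
print(adjuster.adjust(2.5))  # 10.0: the model mean maps onto the reference mean
```

Because the statistics are updated incrementally, the adjustment parameters can keep improving while the simulation is running, matching the idea of running bias adjustment alongside the global model rather than as a separate post-processing step.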

Why a Climate Change Adaptation Digital Twin?

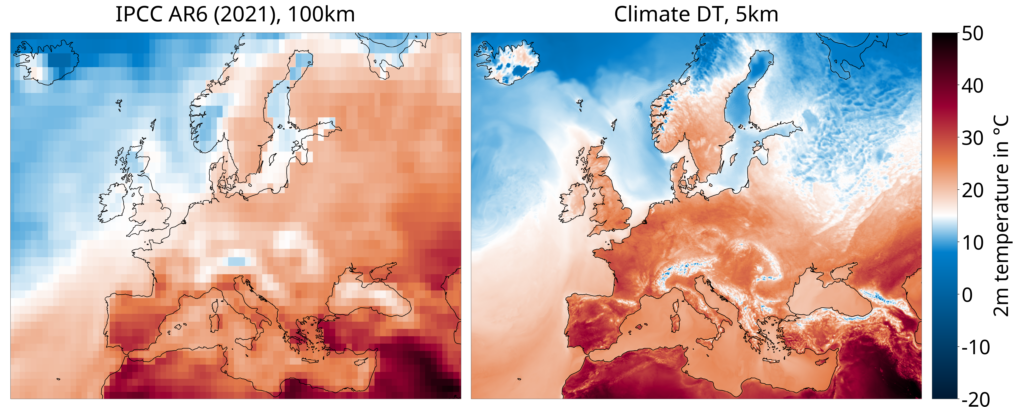

In the face of rapid climate change, much remains unknown about climate change at the regional and local level, as highlighted by the Intergovernmental Panel on Climate Change (IPCC) in 2021.

Current global climate models cannot explicitly resolve a number of key processes, such as ocean eddies and deep atmospheric convection, nor their impact on the global circulation. This results in inaccurate or incomplete representations of key regional aspects, which in turn affect the regional models used to plan adaptation to regional and local changes in our climate.

The limited confidence in key aspects of how the climate is changing at regional and local scales shows a need for improved assessment of climate-related risks associated with both local and global processes, and in particular to integrate rapidly changing and unexpected risks or wildcards. In this context, climate information sources need to be timely and driven by societally-relevant questions, something that current practice, mainly driven by research efforts and curiosity, is not able to satisfy.

Bridging the gap requires new ways to generate climate information that go beyond traditional climate modelling and impact assessment. Estimating the changes in climate and its extremes reliably requires better models that take advantage of increasingly rich observations, computing power and scientific knowledge of physical processes. These new global models must credibly simulate climate on regional and local scales, taking full account of both the remote and local processes that matter for the adaptation of climate-sensitive sectors.

Moreover, the availability of increasingly powerful high-performance computing (HPC) systems and recent developments in model efficiency and scalability make it possible to perform climate simulations several decades ahead at resolutions where critical small-scale processes, such as ocean eddies and atmospheric convection, start to be resolved.

Several groups worldwide are already developing successful prototypes of eddy-rich, storm-resolving climate models with a grid-spacing of less than 10 km. This provides more realistic simulations by reducing the dependence on subgrid parametrisations and better resolving the Earth’s topography. Most of these endeavours are research projects such as H2020 nextGEMS and Horizon Europe EERIE.

A digital twin of the climate system makes it possible to simultaneously achieve better global models and produce interactive information for climate adaptation. It makes use of observations and integrates a climate model and impact-sector models for vulnerable sectors such as energy resource management, all within a technological solution that favours interaction. The twin allows changes and their causes to be assessed consistently across local and global spatial scales and a range of time scales.

The co-design of digital twins with users from the impact sectors most affected by climate change breaks with the current paradigm of climate models with fixed and static flows of information. This approach shifts the provision of climate information for adaptation away from current sources, which are often static, poorly funded and unable to react to user requirements, towards a more dynamic, operational and user-relevant mode.

Further key motivations for building a digital twin for climate change adaptation are enabling the transformation of highly specialised climate data into user-relevant climate information, and shifting climate information generation to a transdisciplinary production mode.

Further reading:

The fast development of DestinE’s Climate Change Adaptation Digital Twin

Destination Earth is a European Union-funded initiative launched in 2022, with the aim of building a digital replica of the Earth system by 2030. The initiative is being jointly implemented by three entrusted entities: the European Centre for Medium-Range Weather Forecasts (ECMWF), responsible for the creation of the first two ‘digital twins’ and the ‘Digital Twin Engine’; the European Space Agency (ESA), responsible for building the ‘Core Service Platform’; and the European Organisation for the Exploitation of Meteorological Satellites (EUMETSAT), responsible for the creation of the ‘Data Lake’.

The Climate DT, procured by ECMWF, is developed through a contract led by CSC – IT Center for Science, with a consortium that also includes the Alfred Wegener Institute Helmholtz Centre for Polar and Marine Research (AWI), Barcelona Supercomputing Center (BSC), Max Planck Institute for Meteorology (MPI-M), Institute of Atmospheric Sciences and Climate (CNR-ISAC), German Climate Computing Centre (DKRZ), National Meteorological Service of Germany (DWD), Finnish Meteorological Institute (FMI), Hewlett Packard Enterprise (HPE), Polytechnic University of Turin (POLITO), Catholic University of Louvain (UCL), Helmholtz Centre for Environmental Research (UFZ) and University of Helsinki (UH).

We acknowledge the EuroHPC Joint Undertaking for awarding DestinE strategic access to the EuroHPC supercomputers LUMI, hosted by CSC (Finland) and the LUMI consortium; MareNostrum 5, hosted by BSC (Spain); Leonardo, hosted by Cineca (Italy); and MeluXina, hosted by LuxProvide (Luxembourg), through a EuroHPC Special Access call.

More information about Destination Earth is on the Destination Earth website and the EU Commission website.

For more information about ECMWF’s role visit ecmwf.int/DestinE
