Springer’s Journal of Big Data has recently published a paper featuring Polytope, ECMWF’s user-facing data service for Destination Earth digital twin data. The paper, led by ECMWF scientist Mathilde Leuridan and co-authored by James Hawkes, Simon Smart, Emanuele Danovaro, Tiago Quintino (ECMWF) and Martin Schultz (FZ Juelich), presents Polytope’s new feature extraction capability. This functionality enables users to retrieve only the bytes of data they need from the vast volumes of weather and climate data produced by the Digital Twins.

Access Polytope on DestinE Platform

Why Polytope

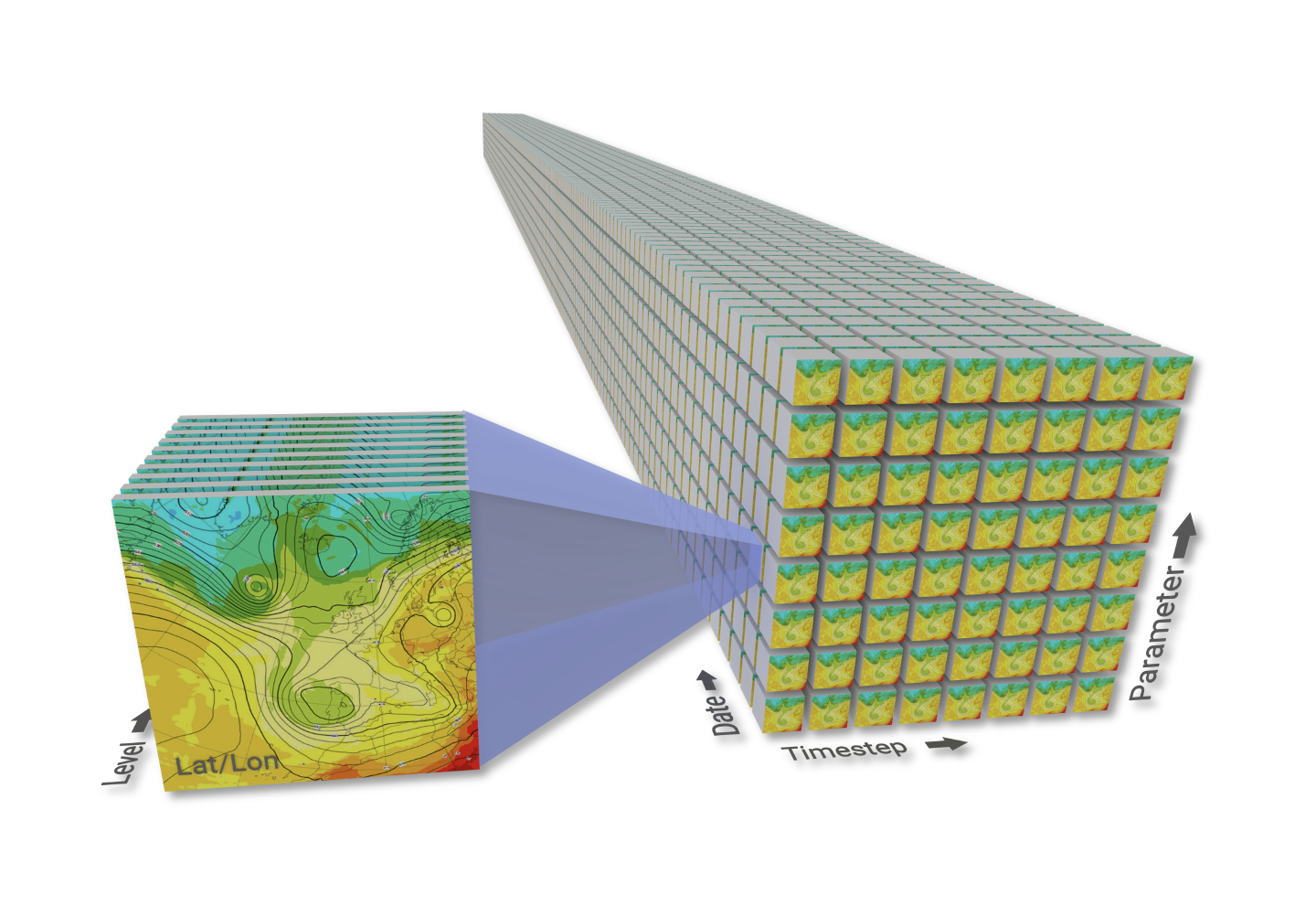

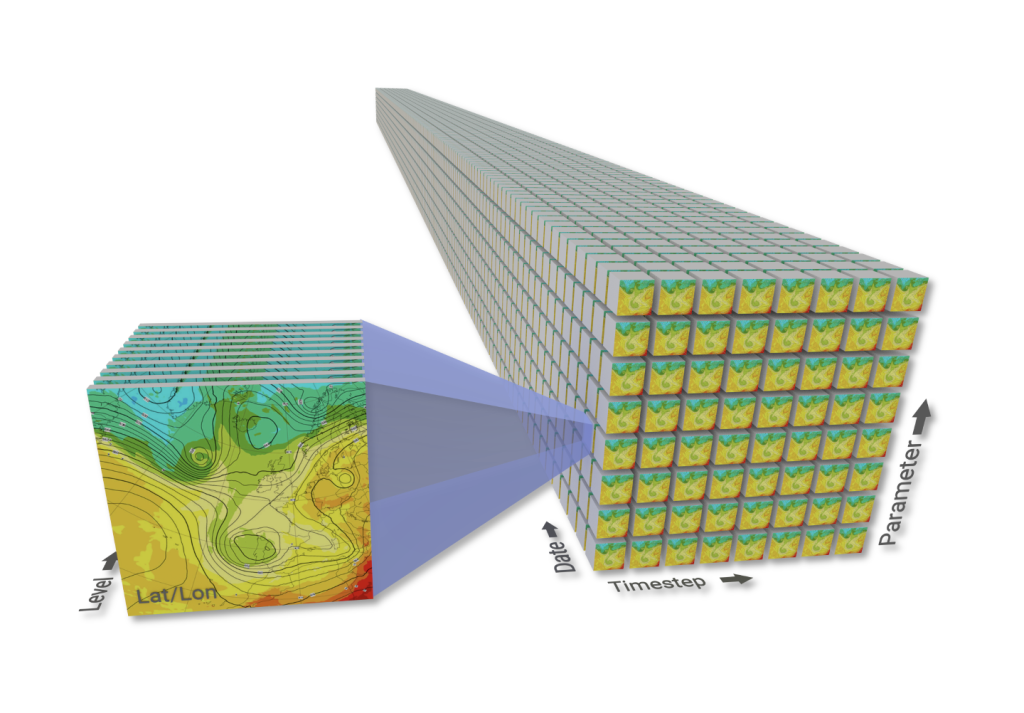

The European Union’s Destination Earth (DestinE) initiative is implementing high-resolution Earth system Digital Twins. With increased spatio-temporal resolutions, the Climate Change Adaptation and Weather-induced Extremes Digital Twins support efforts to better respond and adapt to climate change and extreme events, by providing globally consistent information at the scales where their impacts are observed. This resolution increase comes at the cost of ever-increasing data volumes. In DestinE, the Digital Twins produce global fields at km-scale resolutions, stored as massive multidimensional datacubes in the Destination Earth Data Lake.

Data production in Destination Earth will exceed a petabyte per day. It is therefore essential that this vast flow of weather, climate and impact-sector data is handled, served, and tailored to the needs of downstream users as quickly and efficiently as possible. ECMWF’s Digital Twin Engine (DTE) provides the software solutions and services needed to manage, process, and access this deluge of DestinE data at native resolutions.

One of the solutions playing a key role in this regard is Polytope – which ensures that users can access the petabytes of digital twin data, at native resolutions, while being able to efficiently extract the specific bytes they need. Indeed, most users don’t want to access the whole deluge of data – they want to retrieve data specific to their use-case, whether that is a time-series for a particular city from a climate projection, or precipitation for a specific river basin from an extreme event simulation.

Bytes from petabytes

Polytope is the user-facing data service that lets users access and utilize the primary data, at native resolutions, from DestinE’s digital twins. It provides secure, semantic access to all the Digital Twin data included in the DestinE Data Portfolio, via the DestinE platform. It thereby provides seamless access to digital twin data stored in the distributed network of data bridges – part of the DestinE data lake – located at the EuroHPC sites where the digital twin simulations run.

Polytope allows users to access the data produced by the Digital Twins at native resolution and offers the capability to interpolate the global fields to coarser resolutions. Polytope also provides a novel feature extraction capability, developed in DestinE, which allows users to extract any arbitrary n-dimensional shape (a “polytope”, the n-dimensional generalisation of a polygon) from a datacube.

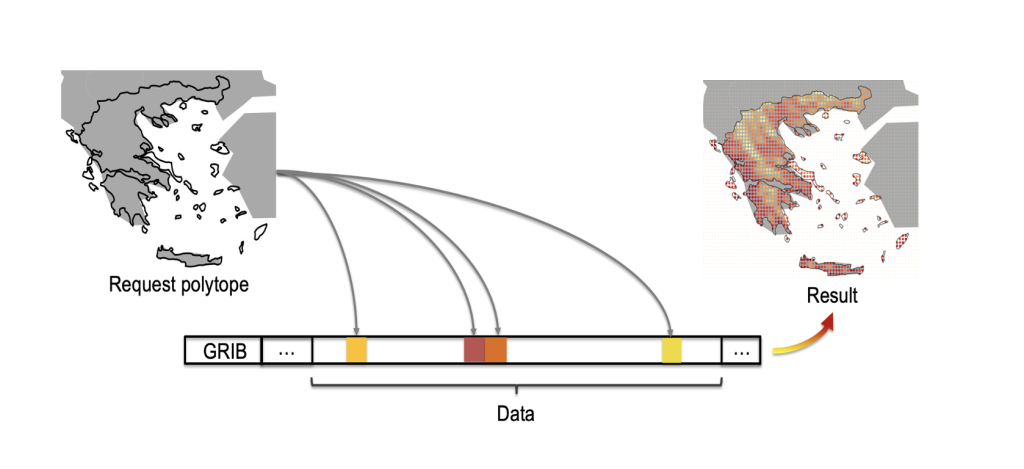

The feature extraction consists of a novel n-dimensional geometric stratification algorithm (polytope), which efficiently locates individual data points within the datacube; and a novel method of jumping to individual data points within a single field, even when that field is compressed and GRIB-encoded (a functionality we named GribJump).
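To make the idea of jumping to one value inside an encoded field more concrete, here is a minimal sketch. It assumes the simplest case, GRIB-style simple packing, where every value occupies the same number of bits, so value number i starts at bit i × nbits. The function names and sample numbers are hypothetical. This is not the GribJump implementation, which according to the paper also handles compressed fields.

```python
def pack_values(values, nbits):
    """Pack non-negative integers into a big-endian bit stream, nbits per value."""
    acc = 0
    for v in values:
        if not 0 <= v < (1 << nbits):
            raise ValueError(f"{v} does not fit in {nbits} bits")
        acc = (acc << nbits) | v
    total_bits = nbits * len(values)
    pad = (-total_bits) % 8          # zero-pad the tail to a whole byte
    return (acc << pad).to_bytes((total_bits + pad) // 8, "big")


def read_packed_value(buf, index, nbits):
    """Return value number `index`, touching only the bytes that contain it."""
    start_bit = index * nbits
    end_bit = start_bit + nbits
    first_byte = start_bit // 8
    last_byte = (end_bit + 7) // 8   # exclusive upper bound
    window = int.from_bytes(buf[first_byte:last_byte], "big")
    shift = last_byte * 8 - end_bit  # unused bits to the right of the value
    return (window >> shift) & ((1 << nbits) - 1)


def to_physical(x, reference, binary_scale, decimal_scale):
    """Simple-packing decode: Y = (R + X * 2**E) / 10**D."""
    return (reference + x * 2.0 ** binary_scale) / 10.0 ** decimal_scale


# Hypothetical field of five 12-bit packed values.
packed = pack_values([5, 1023, 0, 777, 4095], nbits=12)
raw = read_packed_value(packed, index=3, nbits=12)
print(raw, to_physical(raw, reference=250.0, binary_scale=-2, decimal_scale=0))
```

In this sketch only two bytes of the buffer are sliced to recover the fourth value. The same offset arithmetic applied to a file would turn into a seek followed by a very small read.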

This functionality was designed with one clear goal in mind – to read and retrieve only the minimum amount of data needed to fulfil a user’s request. This reduces the data transfer times, reduces I/O load, lowers client hardware demands, and eliminates the need for users to deal with excess data themselves.

Jump or twist

Many data storage systems designed for efficient access use chunking to organize their contents. Chunking can be highly effective when the chunk layout matches the access pattern, allowing data to be read in large contiguous blocks, and this has been successfully applied to many high-resolution datasets. However, optimal access for one type of query requires the datacube to be twisted into the correct orientation. For example, area-based queries perform best when the datacube is oriented along spatial dimensions, while time-series queries benefit from orienting the datacube along the temporal dimension. Even with an optimal layout, a polygon extraction such as in Figure 2 may still involve reading and returning more data than is strictly needed.

In weather and climate prediction, the combination of enormous data volumes, diverse datasets, and varied user requests makes it impractical to maintain multiple versions of the same datacube optimized for different query types. Furthermore, reorienting or transposing data after production introduces additional processing time, delaying its availability for operational workflows where timeliness is critical.

Polytope applies an alternative approach. Instead of twisting or chunking, it can jump directly to the required values in the original data layout. While this method trades some of the contiguous-reading advantages of chunked alignment, it provides consistent efficiency along any axis, supports complex non-aligned queries, and enables data to be accessed immediately as it is written without duplication or reorganization.
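The trade-off can be illustrated with some idealized arithmetic. The sketch below assumes uncompressed 4-byte values, queries that start on a chunk boundary, and a store that must read every touched chunk in full. All shapes are hypothetical and chosen only to show how the cost depends on the orientation of the chunks.

```python
from math import ceil, prod


def bytes_read_chunked(query_shape, chunk_shape, itemsize=4):
    """Bytes read when every chunk touched by the query is read whole."""
    n_chunks = prod(ceil(q / c) for q, c in zip(query_shape, chunk_shape))
    return n_chunks * prod(chunk_shape) * itemsize


def bytes_read_direct(query_shape, itemsize=4):
    """Bytes read when only the requested values are fetched."""
    return prod(query_shape) * itemsize


# Axes are (time, lat, lon); all shapes are hypothetical.
time_series = (1000, 1, 1)       # one grid point over 1000 time steps
area = (1, 256, 256)             # one 256 x 256 region at a single time step
spatial_chunks = (1, 256, 256)   # layout oriented along the spatial dimensions
temporal_chunks = (1000, 1, 1)   # layout oriented along the temporal dimension

for name, query in (("time series", time_series), ("area", area)):
    print(
        name,
        bytes_read_chunked(query, spatial_chunks),
        bytes_read_chunked(query, temporal_chunks),
        bytes_read_direct(query),
    )
```

Under these assumptions each chunked layout is ideal for one query type and reads about a thousand times more data than needed for the other, while the direct count is the same whichever way the query is oriented. Real systems read in blocks and lose some contiguity when jumping, so the direct figures are a lower bound rather than a measurement.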

Polytope in practice

Polytope is flexible enough to extract any n-dimensional polytope from any n-dimensional datacube, which means it can support almost any kind of practical data extraction. To make this easier, Polytope provides a high-level interface for extracting common meteorological features, for example: regions (boxes and polygons), timeseries, vertical profiles and 4D trajectories.

1D Extraction

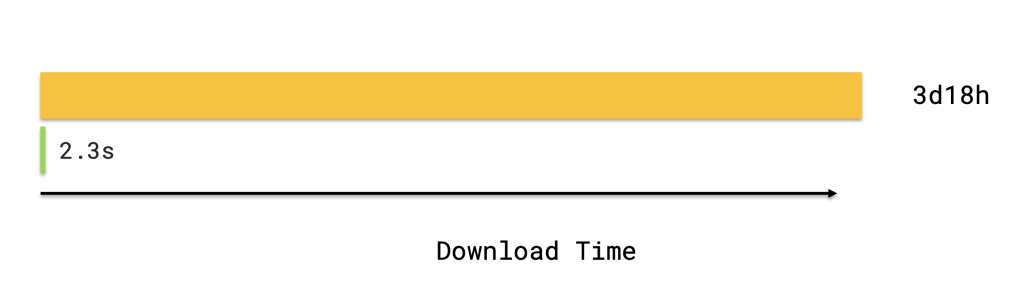

Polytope enables rapid extraction of point data from massive weather and climate datasets. This capability is crucial for localized climate analysis, where users need long time-series for specific coordinates rather than full global fields. Extracting a single point from a 20-year global climate projection at 5 km resolution, such as those produced by the Climate DT, returns a 5.5 MB JSON file in under five seconds on a standard home connection. The full global dataset for the same parameter totals about 770 GB and would take several days to download.
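The scale of the saving can be checked with a few lines of arithmetic. The two data sizes come from the text above. The 20 Mbit/s link speed is an assumption made here for illustration, and the calculation covers transfer time only, not server-side processing.

```python
def transfer_seconds(n_bytes, megabits_per_second):
    """Ideal transfer time for n_bytes over a link of the given speed."""
    return n_bytes * 8 / (megabits_per_second * 1e6)


FULL_DATASET_BYTES = 770e9    # about 770 GB, from the text
POINT_EXTRACT_BYTES = 5.5e6   # 5.5 MB, from the text
ASSUMED_LINK_MBPS = 20        # assumption: a 20 Mbit/s home connection

reduction = FULL_DATASET_BYTES / POINT_EXTRACT_BYTES
full_days = transfer_seconds(FULL_DATASET_BYTES, ASSUMED_LINK_MBPS) / 86400
point_seconds = transfer_seconds(POINT_EXTRACT_BYTES, ASSUMED_LINK_MBPS)

print(f"reduction factor: {reduction:,.0f}")
print(f"full dataset: {full_days:.1f} days, point extract: {point_seconds:.1f} s")
```

With the assumed link speed, the full download takes a few days and the point extract a few seconds, which is consistent with the orders of magnitude quoted above.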

Although point extraction is a simple use of Polytope’s feature-extraction framework, its efficiency comes from the GribJump algorithm, which locates and retrieves the required values directly within GRIB-encoded data. No unnecessary bytes are read from disk, minimizing I/O overhead and allowing the server to service many concurrent users without performance loss.

2D Extraction

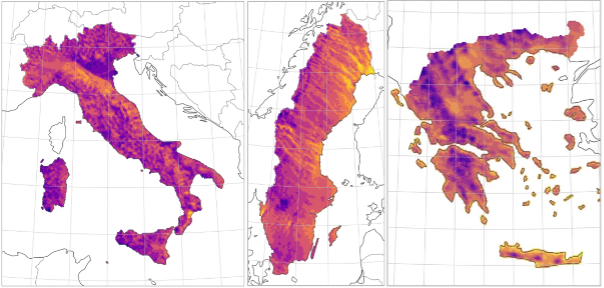

Regional analysis of weather or climate data often focuses on countries or other irregularly shaped domains. Polytope greatly reduces the data retrieval cost for such cases, especially when the target area deviates from rectangular geometry. For instance, Greece covers only about 24% of its bounding box, yielding a 76% reduction in data points when a user chooses the feature extraction instead of the bounding-box feature of Polytope. When multiple parameters and simulation steps are requested, these savings compound, significantly lowering transfer time and computational load.
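The kind of saving described here can be reproduced on a toy example by counting grid points inside a polygon and inside its bounding box. The sketch below uses a standard ray-casting point-in-polygon test on a flat, regular grid. This is a stand-in chosen for brevity and is not the slicing algorithm Polytope itself uses. The triangle and grid spacing are hypothetical.

```python
def point_in_polygon(x, y, polygon):
    """Ray-casting test: toggle on each edge crossed by a ray going in +x."""
    inside = False
    n = len(polygon)
    for k in range(n):
        x1, y1 = polygon[k]
        x2, y2 = polygon[(k + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal line through y
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def count_points(polygon, step):
    """Return (cell centres inside the polygon, cell centres in its bounding box)."""
    xs = [p[0] for p in polygon]
    ys = [p[1] for p in polygon]
    nx = round((max(xs) - min(xs)) / step)
    ny = round((max(ys) - min(ys)) / step)
    inside = sum(
        point_in_polygon(min(xs) + (i + 0.5) * step, min(ys) + (j + 0.5) * step, polygon)
        for i in range(nx)
        for j in range(ny)
    )
    return inside, nx * ny


# Hypothetical right triangle filling roughly half of its bounding box.
triangle = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
inside, total = count_points(triangle, step=1.0)
print(f"{inside} of {total} points kept, {1 - inside / total:.0%} fewer than the box")
```

A country outline would simply be a longer list of vertices. The more the outline departs from a rectangle, the larger the fraction of bounding-box points that never needs to be read or transferred.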

Polytope not only minimizes data volume but also eliminates the need for downstream post-processing. The extracted output is already trimmed to the user’s exact domain and ready for immediate analysis. Behind the scenes, GribJump operates efficiently even for regional extractions, exploiting the largely contiguous spatial structure of the data to read the necessary byte ranges in sequence. This maintains high throughput while keeping server I/O minimal.

Higher Dimensions

Polytope’s full strength emerges when extracting a polytope of higher dimensions. While spatial or temporal extractions are straightforward, the same principles extend seamlessly to complex spatiotemporal requests. There is effectively no limit to the dimensional complexity a Polytope request can express.

For example, a three-dimensional shipping route, varying in latitude, longitude, and time, can be sampled as a single continuous trajectory. Extending this to four dimensions allows full flight-path extraction, incorporating altitude as an additional variable. Similar techniques apply to dynamic meteorological phenomena such as tropical cyclones, where the extraction region itself evolves and expands through time as the system grows.
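As a rough illustration of trajectory sampling, the sketch below maps (time, latitude, longitude) waypoints to the nearest indices of a datacube. It assumes a hypothetical regular latitude-longitude grid with hourly steps. The Digital Twins' native grids and Polytope's own request format differ, so this shows the idea only.

```python
def nearest_index(value, start, step, count):
    """Index of the axis value closest to `value`, clamped to the axis range."""
    idx = round((value - start) / step)
    return min(max(idx, 0), count - 1)


def trajectory_indices(waypoints, axes):
    """Map (time, lat, lon) waypoints to unique (t, j, i) datacube indices.

    `axes` maps each axis name to a (start, step, count) tuple.
    """
    indices = []
    for t, lat, lon in waypoints:
        key = (
            nearest_index(t, *axes["time"]),
            nearest_index(lat, *axes["lat"]),
            nearest_index(lon, *axes["lon"]),
        )
        if key not in indices:  # consecutive waypoints may share a grid cell
            indices.append(key)
    return indices


# Hypothetical cube: 48 hourly steps on a 0.5 degree global grid.
axes = {"time": (0.0, 1.0, 48), "lat": (-90.0, 0.5, 361), "lon": (0.0, 0.5, 720)}
# Hypothetical route as (hours since start, latitude, longitude).
route = [(0.2, 37.9, 23.7), (0.4, 37.8, 23.6), (6.0, 36.4, 25.4)]

cells = trajectory_indices(route, axes)
cube_size = axes["time"][2] * axes["lat"][2] * axes["lon"][2]
print(cells, f"{len(cells)} of {cube_size} values needed")
```

Adding altitude as a fourth axis would follow the same pattern, with one more entry in `axes` and one more component in each waypoint.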

Beyond physical trajectories, Polytope supports higher-order analyses across time and ensemble dimensions. Users can compare the evolution of successive forecasts, for example by comparing the same lead time across successive forecast cycles, or study variability across ensemble members without downloading full fields. In each case, Polytope isolates precisely the required subset of the n-dimensional datacube, minimizing data movement, reducing the post-processing burden and preserving full scientific fidelity.

Outlook to the future

Polytope has proven its efficiency and scalability in serving large-scale data from Destination Earth’s Digital Twins and is now routinely used through the DestinE platform. A series of enhancements is already under way. Among them, ECMWF is extending Polytope with advanced data discovery and federation features, enabling users to browse and access the full DestinE archive across all Data Bridges as a single, unified dataset that seamlessly integrates data from multiple centres.

Polytope will also be applied beyond DestinE, with plans to operationalize the service for ECMWF’s real-time forecast data in the coming year, bringing the same high-performance, on-demand access to data from ECMWF’s global forecasting systems.

Destination Earth is a European Union funded initiative launched in 2022, with the aim to build a digital replica of the Earth system by 2030. The initiative is being jointly implemented by three entrusted entities: the European Centre for Medium-Range Weather Forecasts (ECMWF) responsible for the creation of the first two ‘digital twins’ and the ‘Digital Twin Engine’, the European Space Agency (ESA) responsible for building the ‘Core Service Platform’, and the European Organisation for the Exploitation of Meteorological Satellites (EUMETSAT), responsible for the creation of the ‘Data Lake’.

We acknowledge the EuroHPC Joint Undertaking for awarding this project strategic access to the EuroHPC supercomputers LUMI, hosted by CSC (Finland) and the LUMI consortium; Marenostrum5, hosted by BSC (Spain); Leonardo, hosted by Cineca (Italy); and MeluXina, hosted by LuxProvide (Luxembourg), through a EuroHPC Special Access call.

More information about Destination Earth is on the Destination Earth website and the EU Commission website.

For more information about ECMWF’s role visit ecmwf.int/DestinE

For any questions related to the role of ECMWF in Destination Earth, please use the following email links: