The Application for QUality Assessment is an open-source software package that enables real-time evaluation and uncertainty quantification of the huge volume of data generated by the Climate Change Adaptation Digital Twin (Climate DT) of the European Commission’s Destination Earth initiative. Let’s take a closer look at the concept and main features of this innovative application, developed in an effort led by Italy’s National Research Council (CNR) and Politecnico di Torino.

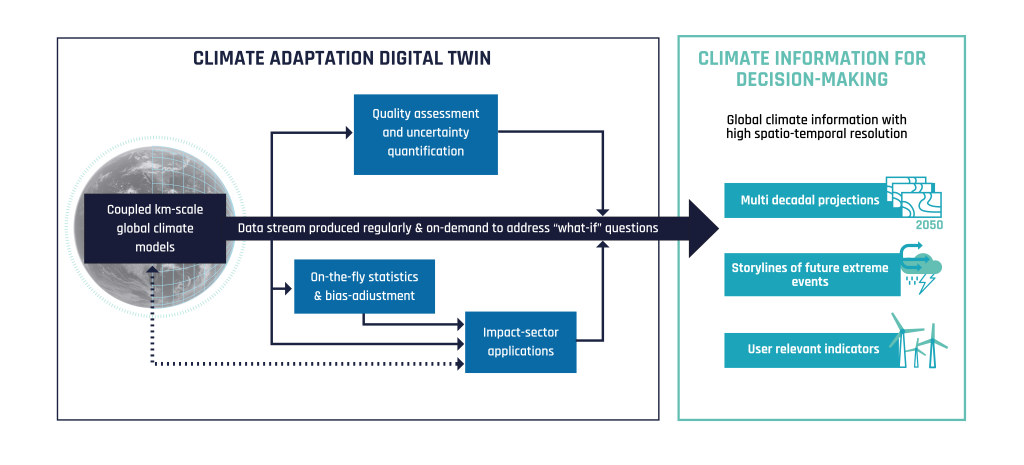

The Climate DT sets up a flexible operational framework for the production and tailoring of climate information on multi-decadal timescales. Monitoring the quality of the climate simulations, which are produced with three coupled climate models at kilometre scale, and assessing their uncertainties are essential requirements for such a framework.

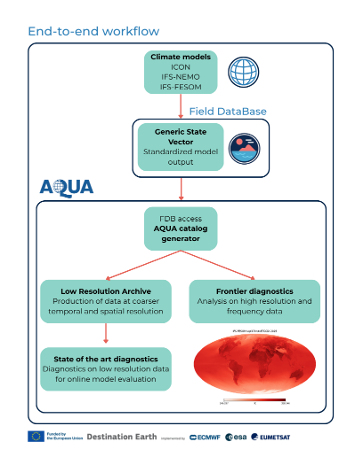

The Application for QUality Assessment (AQUA) – software dedicated to real-time model evaluation and uncertainty quantification of the Climate DT simulations – is therefore a fundamental element of the Climate DT end-to-end workflow. AQUA is designed to assess the robustness and trustworthiness of the models by running a wide range of diagnostics and metrics, providing users, modellers and scientists with the elements they need to understand, evaluate and possibly improve the quality of the simulated climate.

AQUA in a nutshell

AQUA is an open-source Python 3 package that provides an efficient, modular, homogeneous and flexible software infrastructure for accessing and preprocessing climate model output across various formats and data conventions. It is built on widely adopted Python packages (dask, xarray, intake, etc.). Given the large amount of data produced by the Climate DT simulations, it has been developed with performance in mind, enabling lazy access and, most importantly, scalable computations. Its design supports containerization and integration into automated workflows as well as user-defined pipelines, facilitating both operational and research-oriented applications. It also provides a backend that supplies data and plots for visualization through a monitoring dashboard.

A code design simplifying the users’ tasks

The code of AQUA can be divided into two main sections: the AQUA framework part, where the data access and processing steps are performed, and the diagnostic part, where the actual diagnostic-specific analysis delivers plots and processed data to its users.

AQUA hides the details and difficulties associated with accessing a broad range of datasets in different formats by using a catalog system based on intake. These catalogs support GRIB data stored in FDB as well as Zarr and NetCDF, and they include information on data properties, from the data location to the grid specifications.
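To make the catalog idea concrete, here is a minimal, standard-library-only sketch of what a catalog lookup does: it maps a (model, experiment, source) triplet to the data location, format and grid information, so the user only has to name the data. This is not AQUA’s or intake’s API; the `CatalogEntry` fields, the `lookup` helper and all paths and grid names are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CatalogEntry:
    """What a catalog entry might record about one data source (hypothetical fields)."""
    location: str  # file path, FDB request or remote endpoint (placeholder values below)
    fmt: str       # storage format, e.g. "grib-fdb", "zarr" or "netcdf"
    grid: str      # name of the grid specification used later for regridding


# Hypothetical catalog keyed by (model, experiment, source); all values are placeholders.
CATALOG = {
    ("IFS-NEMO", "historical", "monthly-2d"): CatalogEntry(
        "/data/ifs-nemo/historical/2d.zarr", "zarr", "native"),
    ("ERA5", "reanalysis", "monthly"): CatalogEntry(
        "/obs/era5/monthly/*.nc", "netcdf", "regular-0.25deg"),
}

SUPPORTED_FORMATS = {"grib-fdb", "zarr", "netcdf"}


def lookup(model: str, exp: str, source: str) -> CatalogEntry:
    """Resolve a (model, exp, source) triplet to its entry, failing loudly if unknown."""
    try:
        entry = CATALOG[(model, exp, source)]
    except KeyError:
        known = sorted(f"{m}/{e}/{s}" for m, e, s in CATALOG)
        raise KeyError(f"{model}/{exp}/{source} not in catalog; known: {known}") from None
    if entry.fmt not in SUPPORTED_FORMATS:
        raise ValueError(f"unsupported format {entry.fmt!r}")
    return entry
```

In AQUA the entries live in intake catalog files rather than a Python dictionary; the sketch only shows the principle that data are requested by name while the catalog supplies location, format and grid.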

Once the catalogs are configured, accessing the data is seamless and takes just a few lines of code. AQUA also supports Polytope, which provides remote access to digital twin data available in the DestinE Data Lake.

Once the data are lazily loaded as an xarray object, the AQUA framework provides a series of methods to manipulate them that appear as natural extensions of xarray. For example, it provides seamless out-of-memory regridding, achieved with a dedicated backend that relies on CDO-generated weights. Other features include area-weighted spatial averaging, vertical interpolation, temporal averaging and detrending. However, the most important capability of AQUA’s framework is the possibility to fix, convert and homogenize the metadata of variables, dimensions and coordinates. This provides users with data that are always coherent across observations and Climate DT models.
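Regridding with precomputed weights works because remapping from one grid to another is a fixed sparse linear operator: the weights depend only on the two grids, so they can be generated once (for instance with CDO) and then applied to every time step or chunk of data. The sketch below assumes SCRIP-style triplets of source index, destination index and weight; the function name and the toy weights are hypothetical, and AQUA’s own backend is not reproduced here.

```python
import numpy as np


def apply_remap_weights(src_field, src_idx, dst_idx, weights, n_dst):
    """Apply precomputed sparse remapping weights to a flattened source field.

    Each triplet (src_idx[k], dst_idx[k], weights[k]) means that destination
    cell dst_idx[k] receives weights[k] times source cell src_idx[k].
    Indices are 0-based here; SCRIP-format weight files typically store
    1-based addresses, which would need shifting by one first.
    """
    src_field = np.asarray(src_field, dtype=float)
    weights = np.asarray(weights, dtype=float)
    dst = np.zeros(n_dst)
    # np.add.at accumulates correctly even when a destination index repeats
    np.add.at(dst, dst_idx, weights * src_field[src_idx])
    return dst
```

Because every chunk is remapped independently with the same weights, the operation can be applied piece by piece to data that do not fit in memory.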

The second key feature of AQUA is its diagnostics suite, which includes a dozen different diagnostics providing a wide range of metrics to assess the reliability of the simulations. These range from simple metrics, such as time series, seasonal cycles and bias estimates, to more complex ones, such as performance-index scorecards, and process-based diagnostics, for example teleconnection indices.
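Two of the simple metrics mentioned above can be sketched with NumPy on a regular latitude-longitude grid: an area-weighted global mean (weighting each row by the cosine of latitude, a common approximation of grid-cell area) that yields a time series, and a seasonal cycle computed from monthly values. The function names are illustrative rather than AQUA’s diagnostics, and the test data are synthetic.

```python
import numpy as np


def global_mean(field, lat):
    """Area-weighted mean over the last two axes (lat, lon), weighting rows by cos(latitude)."""
    w = np.cos(np.deg2rad(np.asarray(lat, dtype=float)))[:, None]  # shape (nlat, 1)
    w = np.broadcast_to(w, field.shape[-2:])                       # shape (nlat, nlon)
    return (field * w).sum(axis=(-2, -1)) / w.sum()


def seasonal_cycle(monthly_series):
    """Average a monthly series that starts in January into 12 calendar-month means."""
    s = np.asarray(monthly_series, dtype=float)
    if s.size % 12:
        raise ValueError("expects whole years of monthly data")
    return s.reshape(-1, 12).mean(axis=0)
```

A bias estimate then follows as the difference between model and reference climatologies once both are on a common grid, which is one reason the regridding step matters.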

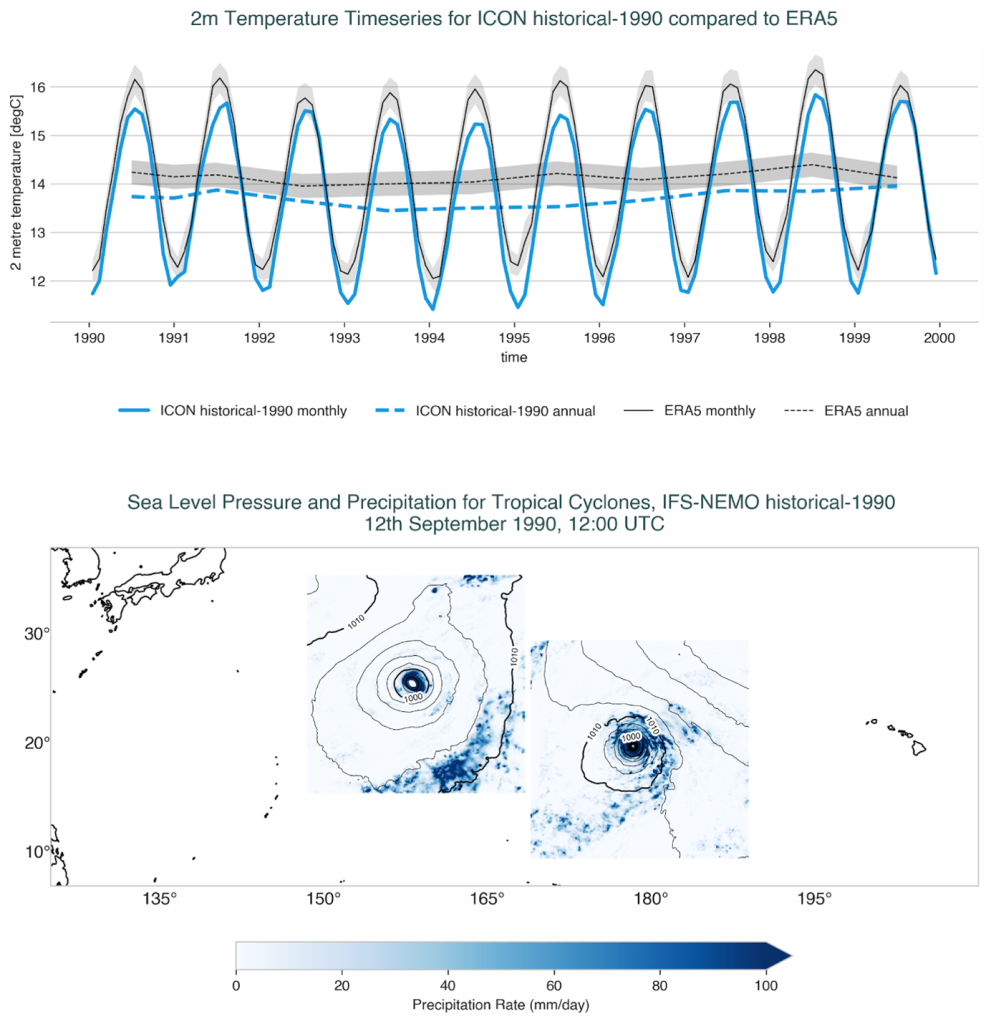

In order to save computational resources, these diagnostics are meant to run on a data-reduced version of the simulation, known as the Low Resolution Archive (LRA). The LRA is an internal AQUA data resource: a monthly, low-resolution archive of the data, produced by AQUA for each Climate DT simulation by leveraging all of AQUA’s core capabilities. On top of these more “standard” diagnostics, AQUA can also provide advanced diagnostics, named frontier diagnostics, that run directly on the native data at high temporal and spatial resolution. These provide information on specific features of Earth’s climate, such as the probability density function of tropical rainfall or tropical cyclone tracking.
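The data reduction behind an archive like the LRA can be illustrated with two operations: averaging daily fields into monthly means and coarsening the grid. In this sketch, simple block averaging stands in for the proper regridding AQUA uses; the helper names and the toy data are hypothetical.

```python
import numpy as np


def monthly_means(daily, days_per_month):
    """Average a (time, lat, lon) array of daily fields into monthly means.

    days_per_month lists, in order, the length of each month covered by `daily`.
    """
    edges = np.cumsum([0] + list(days_per_month))
    if edges[-1] != daily.shape[0]:
        raise ValueError("days_per_month does not match the time axis")
    return np.stack([daily[a:b].mean(axis=0) for a, b in zip(edges[:-1], edges[1:])])


def coarsen(field, factor):
    """Block-average the last two axes by an integer factor (a stand-in for regridding)."""
    *lead, ny, nx = field.shape
    if ny % factor or nx % factor:
        raise ValueError("grid size must be divisible by factor")
    # Split each spatial axis into (coarse index, position inside the block)
    blocks = field.reshape(*lead, ny // factor, factor, nx // factor, factor)
    return blocks.mean(axis=(-3, -1))
```

Block averaging ignores the fact that cells near the poles are smaller on a latitude-longitude grid; regridding with area-aware weights avoids that error.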

Integrated in the end-to-end workflow of the Climate DT

The integration of AQUA within the Climate DT end-to-end workflow is fundamental to achieving timely evaluation of the climate model simulations. For this reason, AQUA is containerized and designed to provide a clear API for interacting with the rest of the system and to run efficiently in the EuroHPC environments where the Climate DT simulations run.

For each new simulation, AQUA catalogs are generated automatically, giving the workflow and interested users immediate access to the data. Through this access, whenever a new month of simulation is completed on the EuroHPC systems LUMI and MareNostrum5, the AQUA framework is called to compute and store the LRA.
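The per-month trigger amounts to simple bookkeeping: find the months whose last day has been simulated and that do not yet have a reduced archive. A minimal sketch, in which `completed_months` and `months_to_process` are hypothetical helpers, not part of AQUA or of the Climate DT workflow:

```python
from datetime import date, timedelta


def completed_months(start, last_simulated_day):
    """Return (year, month) pairs from `start` onward whose last day has been simulated."""
    y, m = start.year, start.month
    months = []
    while True:
        ny, nm = (y + 1, 1) if m == 12 else (y, m + 1)
        last_day_of_month = date(ny, nm, 1) - timedelta(days=1)
        if last_day_of_month > last_simulated_day:
            break  # this month is still running
        months.append((y, m))
        y, m = ny, nm
    return months


def months_to_process(start, last_simulated_day, already_done):
    """Completed months that have no reduced archive yet (hypothetical bookkeeping)."""
    return [ym for ym in completed_months(start, last_simulated_day) if ym not in already_done]
```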

Once the LRA is produced, the workflow runs an integrated analysis by executing all the AQUA diagnostics in parallel, producing a large set of outputs that together give a snapshot of the status of the analysed climate simulations: multiple aspects of the atmospheric circulation, the ocean and the sea-ice state are considered at the same time.
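Running independent diagnostics side by side can be sketched with Python’s `concurrent.futures`. The `run_all` helper and the toy diagnostics are hypothetical; in an operational HPC setting the diagnostics would more likely run as separate jobs than in a single thread pool.

```python
from concurrent.futures import ThreadPoolExecutor


def run_all(diagnostics, data, max_workers=4):
    """Run independent diagnostics concurrently and collect results and failures by name."""
    results, failures = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {name: pool.submit(func, data) for name, func in diagnostics.items()}
        for name, fut in futures.items():
            try:
                results[name] = fut.result()
            except Exception as exc:  # one broken diagnostic must not stop the others
                failures[name] = repr(exc)
    return results, failures
```

Collecting failures by name means a single broken diagnostic does not prevent the others from delivering their results.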

Diagnostics provide comparisons against reanalyses (e.g. ERA5) as well as global gridded observations (e.g. ESA-CCI, CERES), and also deliver a set of compact metrics to estimate the degree of realism of the simulated climate. This is especially relevant for the historical and present-day simulations, which are used to validate the Climate DT system, but it can also provide useful information for future scenarios.
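A compact metric of this kind can be built from an area-weighted error between a model field and a reference field. The sketch below computes an area-weighted RMSE and a variance-normalized squared error, one common way to build performance indices for a scorecard; it is illustrative and not AQUA’s exact definition, and all names are hypothetical.

```python
import numpy as np


def weighted_rmse(model, ref, weights):
    """Area-weighted root-mean-square difference between model and reference."""
    w = weights / weights.sum()
    return float(np.sqrt((w * (model - ref) ** 2).sum()))


def normalized_error(model, ref, ref_std, weights):
    """Area-weighted squared error scaled by the reference variability.

    Values around 1 mean errors comparable to the reference variability;
    smaller is better.
    """
    w = weights / weights.sum()
    return float((w * ((model - ref) / ref_std) ** 2).sum())
```

Computing `normalized_error` for each variable and storing the results in a dictionary gives a minimal scorecard that can be compared across models.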

Bottom: precipitation rate (shading) and mean sea level pressure (contours) around two tropical cyclones in the Pacific Ocean from the IFS-NEMO historical simulation performed in Phase 1 of the Climate DT. The two typhoons can be detected while the simulation is running, so that only high-resolution data in their surroundings are stored for climate analysis.

Figures condensing the most relevant information are then stored online, providing the backend for the Climate DT dashboard, which is currently under development.

This complex but highly efficient workflow is run for every new monthly chunk of the simulations and offers a robust yet transparent method to assess their quality.

The analysis is run independently on each simulation from each model (and on each realization, when available) to provide a scalable pipeline. Once the simulations are concluded, AQUA tools can be used to produce ensemble statistics, which provide an immediate measure of the uncertainty of the Climate DT system.
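Once all realizations are on a common grid (for example after regridding to the same low resolution), basic ensemble statistics reduce to a mean and a spread across members. A minimal NumPy sketch with a hypothetical `ensemble_stats` helper:

```python
import numpy as np


def ensemble_stats(members):
    """Ensemble mean and spread (sample standard deviation) across members stacked on axis 0."""
    arr = np.stack([np.asarray(m, dtype=float) for m in members])
    mean = arr.mean(axis=0)
    # With a single member there is no spread to estimate, so report zeros
    spread = arr.std(axis=0, ddof=1) if arr.shape[0] > 1 else np.zeros_like(mean)
    return mean, spread
```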

Overall, AQUA is a highly efficient and modular tool for the comprehensive, real-time evaluation of the Climate DT simulations, aimed at continuously improving their trustworthiness and reliability.

The Climate DT, procured by ECMWF, is developed through a contract led by CSC-IT Center for Science, and the consortium includes Alfred Wegener Institute Helmholtz Centre for Polar and Marine Research (AWI), Barcelona Supercomputing Center (BSC), Max Planck Institute for Meteorology (MPI-M), Institute of Atmospheric Sciences and Climate (CNR-ISAC), German Climate Computing Centre (DKRZ), National Meteorological Service of Germany (DWD), Finnish Meteorological Institute (FMI), Hewlett Packard Enterprise (HPE), Polytechnic University of Turin (POLITO), Catholic University of Louvain (UCL), Helmholtz Centre for Environmental Research (UFZ) and University of Helsinki (UH).

Destination Earth is a European Union-funded initiative launched in 2022, with the aim of building a digital replica of the Earth system by 2030. The initiative is being jointly implemented by three entrusted entities: the European Centre for Medium-Range Weather Forecasts (ECMWF) responsible for the creation of the first two ‘digital twins’ and the ‘Digital Twin Engine’, the European Space Agency (ESA) responsible for building the ‘Core Service Platform’, and the European Organisation for the Exploitation of Meteorological Satellites (EUMETSAT), responsible for the creation of the ‘Data Lake’.

We acknowledge the EuroHPC Joint Undertaking for awarding DestinE strategic access to the EuroHPC supercomputers LUMI, hosted by CSC (Finland) and the LUMI consortium, MareNostrum5, hosted by BSC (Spain), Leonardo, hosted by Cineca (Italy) and MeluXina, hosted by LuxProvide (Luxembourg) through a EuroHPC Special Access call.

More information about Destination Earth is on the Destination Earth website and the EU Commission website.

For more information about ECMWF’s role visit ecmwf.int/DestinE